The Wolf Onboard

Not so many updates recently, as I’ve been working my arse off on quite a large project – not to mention moving and getting married!

The past few months have seen me working in collaboration with Galina Rin of the alternative rock/metal act Death Ingloria. A few months ago I made a lyric video for her after we discussed our shared wish to create a video for each song on an album. I was creating videos for Khaidian, and she was in the process of getting artists and animators to work on the ‘art’ portions of her project. Once I had completed the video for Silent Running Engaged, a collaboration using the artwork of Anne Bengard, I got to work on the main project for Rin.

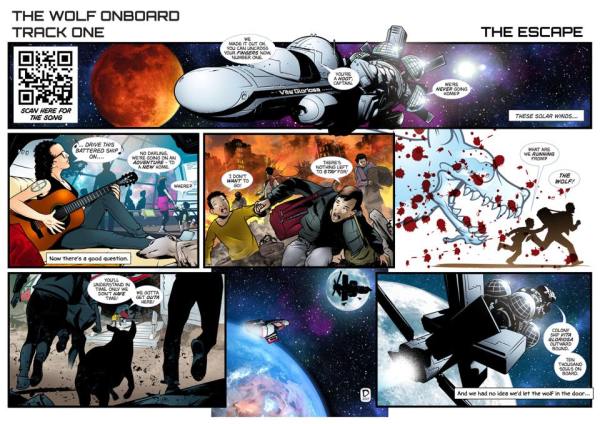

The main concept for the videos is to use a comic that Rin produced in collaboration with 2000 AD writer Hilary Robinson and artist Nigel Dobbyn. A tale of the fall of mankind at the hands of genetic engineering and a malevolent A.I., the story follows the album Death Ingloria has produced, “The Wolf Onboard”. Despite the band being a one-person outfit, the final result is a surprisingly cohesive beast, and it was my job to bring Dobbyn’s illustrations to life.

This has meant taking each of the seven pages of Dobbyn’s work, splitting them into layers and recreating the backgrounds, elements and figures that are key to the story. Using both the puppet tool and DuIK, I have animated the figures so that they have some movement as they appear within the action. Some pages have been a challenge because they are relatively stark, so creative use of the camera has been a necessity, along with inventive placement of the lyrics.

On top of all the animation for the actual releases, I am also producing versions for her live performances. These are shown on a round projection screen, back-projected from a short-throw projector. The videos are masked into a circle to fit the screen, which has meant re-editing some of the original camera angles and positions: I have a tendency to place key items towards the side of the frame, so adjusting this was vital. We also decided to place some lyrics within the video, which has meant some additional thinking about timing, size and placement. Live, I have been running Resolume Arena, with a main Mac controlling the timing via MIDI. I initially intended to use it for projection mapping and masking onto the screen, but since Rin will eventually be running the show from a single Mac without this setup, I decided to simply mask off the projection at the rendering stage.
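Masking at the rendering stage boils down to keeping only the pixels that fall inside a circle. As a rough illustration only (this is not the actual render setup), the Python sketch below assumes the circle is centred in the frame with a diameter equal to the frame height; the function names are hypothetical. It also shows why side-of-frame placement was a problem: in a 16:9 frame, only π/4 × 9/16 ≈ 44% of the picture falls inside the circle.

```python
from __future__ import annotations


def circle_mask(width: int, height: int) -> list[list[int]]:
    """Return a height x width mask: 1 inside the inscribed circle, 0 outside.

    Assumption: the circle is centred in the frame and its diameter equals
    the shorter side (the frame height for landscape video).
    """
    cx, cy = width / 2, height / 2
    radius = min(width, height) / 2
    mask = []
    for y in range(height):
        row = []
        for x in range(width):
            # Sample at the pixel centre so the circle stays symmetric.
            dx, dy = x + 0.5 - cx, y + 0.5 - cy
            row.append(1 if dx * dx + dy * dy <= radius * radius else 0)
        mask.append(row)
    return mask


def survives_mask(x: float, y: float, width: int, height: int) -> bool:
    """Would a point at (x, y) still be visible on the round screen?"""
    cx, cy = width / 2, height / 2
    radius = min(width, height) / 2
    return (x - cx) ** 2 + (y - cy) ** 2 <= radius ** 2


def apply_mask(pixels, mask, background=(0, 0, 0)):
    """Replace every pixel outside the circle with `background`.

    `pixels` is a list of rows of RGB tuples, the same shape as `mask`.
    """
    return [
        [p if m else background for p, m in zip(prow, mrow)]
        for prow, mrow in zip(pixels, mask)
    ]
```

For a 1920×1080 frame, `survives_mask(960, 540, 1920, 1080)` is true for the centre, while a figure at `x=300` on the horizontal centre line sits 660 pixels from the centre, outside the 540-pixel radius, and is lost.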

You can find more information on Death Ingloria here:

facebook.com/deathingloria

patreon.com/deathingloria

Death Ingloria’s Album launch for “The Wolf Onboard” is on the 16th November 2017 at The New Cross Inn, London.

A Case of Fours

It’s taken several months to complete this, as there were a lot of storyboard elements and a very definite sequence of events in Andrei Chikatilo’s life. I didn’t want to stray too far from the actual life of Chikatilo and his absolutely crazy story, so I used it as the basis for the flow of the video.

A Case of Fours is based on Andrei Chikatilo, a prolific Soviet serial killer nicknamed the Butcher of Rostov, the Red Ripper and the Rostov Ripper, who sexually assaulted, murdered and mutilated at least 52 women and children between 1978 and 1990 in the Russian SFSR, the Ukrainian SSR and the Uzbek SSR. Chikatilo confessed to a total of 56 murders and was tried for 53 of these killings in April 1992. He was convicted and sentenced to death for 52 of these murders in October 1992 and was executed in February 1994.

He earned the names Rostov Ripper and Butcher of Rostov because most of his murders were committed in the Rostov Oblast of the Russian SFSR.

Made with After Effects, Vue, Daz Studio and Red Giant plugins.

Black Rose Entertainment

I’ve just spent the last couple of months filming and putting together a series of videos for the showgirl troupe Black Rose Entertainment. They are lovely and exceptionally talented, so well worth your time.

I did want to go a little further with all this, but time was against us. I filmed some footage using RGBDToolkit but didn’t manage to get any of it into the final edits. Possibly something to use later?

The choreography was originally set to different music, such as Rihanna and songs from Moulin Rouge. For copyright reasons, and to give the videos a sense of uniqueness, I decided to write three new songs specifically for them. Matching the choreography to the new music was a slight challenge, in particular for the final video, which incorporates all four dances and some fire work from Alice.

Videogame music video

OK, another idea: create a video game that doubles as a music video. It doesn’t need to be super flashy; in fact, a retro feel might be even better. Open it up to people on mobile platforms and there’s a chance it might spread a little more virally than the usual kind of video.

Seems like a couple of people have already explored this hybrid genre.

Looks like it might be possible to create something in a program called GameMaker. It’s a pretty interesting way of creating things and may be a good way to develop something retro and simple fairly quickly.

Prisma

Over the last few months I’ve noticed people running their photos through a new app that turns them into the closest approximation of a painting I’ve seen from an app yet.

Cool, so how do I turn it into video?

Looks like someone already figured it out:

This is basically a video split into individual frames, each of which is processed by Prisma. Once every frame is done, the frames are reassembled into a sequence.
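The split, process, reassemble loop can be sketched in Python. This is a minimal sketch under stated assumptions: it uses ffmpeg for splitting and reassembly (not necessarily what the original creator used), the folder and function names are hypothetical, and the styling step itself is left out because it means running each frame through Prisma, which this code does not do.

```python
from __future__ import annotations

from pathlib import Path


def extract_cmd(video: str, frames_dir: str) -> list[str]:
    """ffmpeg command that dumps every frame of `video` as numbered PNGs."""
    return ["ffmpeg", "-i", video, str(Path(frames_dir) / "frame_%05d.png")]


def reassemble_cmd(styled_dir: str, fps: int, output: str) -> list[str]:
    """ffmpeg command that rebuilds a video from the processed PNG sequence.

    `fps` should match the source frame rate, otherwise playback speed changes.
    """
    return [
        "ffmpeg",
        "-framerate", str(fps),  # input rate for the image sequence
        "-i", str(Path(styled_dir) / "frame_%05d.png"),
        "-c:v", "libx264",
        "-pix_fmt", "yuv420p",  # widely playable pixel format
        output,
    ]


def frames_to_style(frames_dir: str, styled_dir: str) -> list[tuple[Path, Path]]:
    """Pair each extracted frame with its styled output path, in order.

    Frames that already have a styled version are skipped, so a long
    job can be resumed where it stopped.
    """
    pairs = []
    for src in sorted(Path(frames_dir).glob("frame_*.png")):
        dst = Path(styled_dir) / src.name
        if not dst.exists():
            pairs.append((src, dst))
    return pairs
```

In use, each command would be passed to `subprocess.run(cmd, check=True)`, with the (hypothetical) per-frame styling done between the two ffmpeg calls. Building the commands as lists means no shell is involved, so paths containing spaces need no extra quoting.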

I kinda like the first-person point of view here. It wasn’t what I had in mind at first; I was thinking more of a camera following someone. I’ve got some ideas along the lines of a protagonist travelling somewhere, possibly being followed; the first-person view adds to the paranoia.

Brain squeezings: suddenly holding soil. Face paint – looks freaky in Prisma.

v.1

Some more thoughts

Here’s Linkin Park’s video, where they use similar technology. In the Khaidian video for Martyrdom, the image looks slightly blocky because I was forced to shoot only full-body shots of the band (due to the 360-degree view) on a low-resolution camera. The Kinect v.1 is low-res compared to the newer Kinect for Xbox One, which would have greatly improved the clarity of the image, but the limitations of RGBDToolkit mean I’m restricted to the first iteration.

https://www.fxguide.com/featured/beautiful-glitches-the-making-of-linkin-parks-new-music-vid/

I may end up using the beta of Depthkit, which works with the Kinect v.2; that would be really interesting to try.

Chagall

A really interesting use of motion control: rather than a Leap Motion, she uses Mi-Mu gloves.

She’s playing soon, I think…